AI Is Running Out of Data

The leviathan of AI needs an endless stream of data to feed it, but data is a finite resource.

Artificial intelligence requires a lot of resources, not the least of which is data to help create its learning infrastructure and spit out everything from the wrong answer on Google to increased crop yields that help feed the globe. We often focus on the outputs of AI, but in reality the inputs are just as (if not more) important.

And while it may feel that the data we produce daily is infinite, the reality is that it’s not. The funny pictures we make on DALL-E require insane amounts of data to train the neural networks to make pictures of anything we could imagine. And the AI companies are running out of it.

All Your Data Are Belong to Me

It’s not simply that data is finite - that would be too easy an explanation. The layer under that is a bit more complex. Not all of the data that companies like OpenAI want to train their machines on is publicly available, thanks to a variety of resources implemented by publishers and other owners of web-based data on the open internet, which has traditionally been AI companies’ main source of training data.

One of these resources is admittedly low-tech: robots.txt. This small plain-text file sits at the root of a website and tells web crawlers which parts of the site they are allowed to scrape. Compliance is voluntary, but reputable crawlers honor it. Originally meant to keep web crawlers from inadvertently overloading web servers (an accidental DDoS attack, in effect), it’s now also being used to tell the major scrapers to back off (this very newsletter does so).
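For the curious, here is a minimal sketch of how a well-behaved crawler might read such a file, using Python's standard-library `urllib.robotparser`. The rules and the `example.com` URL are made up for illustration, as are the `may_crawl` helper and the `ExampleSearchBot` name; GPTBot is the user agent OpenAI's crawler identifies itself with.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block OpenAI's crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def may_crawl(user_agent, url, robots_txt=ROBOTS_TXT):
    """Return True if the given rules allow this user agent to fetch the URL."""
    parser = RobotFileParser()
    # parse() takes the file's lines; a real crawler would fetch them first.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(may_crawl("GPTBot", "https://example.com/latest-issue"))            # False
print(may_crawl("ExampleSearchBot", "https://example.com/latest-issue"))  # True
```

Note that nothing here stops a crawler that never runs the check. The file is a polite request, not a lock.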

Other strategies include putting content behind paywalls or explicitly laying out usage rights in a site’s terms of service (this newsletter imagines the former is more effective than the latter). When these don’t work, you can always sue.

While we’ve spoken about the value proposition of giving your data away for free before, there really isn’t a symbiotic exchange when it comes to letting AI networks scrape your data. The value prop for content creators on the web to give away their work to companies to train AI models is firmly one-sided, and, dear reader, it is not weighted towards the humans creating the content.

So while these AI companies may be concerned about hitting a “data wall” when it comes to training their models, those who contribute to the open web are under no obligation to ensure access to their content to companies who most certainly will not share the profits with the data sources. In fact, the models the companies are creating are a threat to this kind of work as opposed to driving further value for it.

So Make It Up

One way these generative AI models are trying to get around this decreasing data access is to simply create their own data to feed their models. Taking a break from generating awful food images for Uber Eats, some generative AI shops have turned to synthetic data to try to plug the gap left behind by a shrinking open web data crawl-a-thon.

The theory makes sense: if these companies can generate the kinds of dupes that have lawyers putting fake cases in their briefs, then there’s plenty of opportunity for them to simply create data to re-ingest into their systems to keep the data flow going. After all, the outputs of artificial intelligence models are supposed to mimic real life as closely as possible; why not simply feed those realistic outputs back into the model infrastructure that created them?

To better understand this, we should take a trip to the 17th century and one of the leading royal dynasties of the time: the Hapsburgs. This family ruled the Spanish empire until they took the concept of keeping it within the family a bit too far, resulting ultimately in the birth of Charles II, who was so hobbled by genetic diseases exacerbated by generations of inbreeding that his ability to rule was essentially non-existent and he was unable (mercifully) to continue the lineage, which ended with his death in 1700. While that sentence ran on, the Spanish Hapsburgs did not.

The kind of consanguinity that led to their downfall also has the potential to take down neural networks relying on synthetic - essentially inbred - data to continue evolving their models.

The New York Times took a deep dive into what happens when AI outputs are used as inputs for further model training. As the generations of training came and went, the outputs got less and less relevant. Eventually, the models simply put out the same thing over and over, essentially becoming a late-born Hapsburg overseeing the decline of a once-thriving empire.
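The narrowing effect can be seen in a toy setting. The sketch below is not the Times's experiment and involves no neural network: it assumes the simplest possible "model", a bell curve fitted to ten data points, which then generates the ten data points the next generation trains on. The function name `simulate_collapse` and every parameter value are illustrative choices.

```python
import random
import statistics


def simulate_collapse(generations=500, sample_size=10, seed=0):
    """Refit a normal distribution to its own samples, generation after generation.

    Returns the standard deviation of each generation's training data.
    """
    rng = random.Random(seed)
    # Generation 0 is "real" data drawn from a standard normal distribution.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    spreads = [statistics.stdev(data)]
    for _ in range(generations):
        # "Train" the model: estimate the mean and spread of the current data.
        mu = statistics.mean(data)
        sigma = statistics.stdev(data)
        # The next generation sees only the previous model's outputs.
        data = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        spreads.append(statistics.stdev(data))
    return spreads


spreads = simulate_collapse()
print(f"spread of the original data: {spreads[0]:.3f}")
print(f"spread after 500 generations: {spreads[-1]:.3g}")
```

With samples this small, each refit loses a little of the original variety, and over enough generations the spread tends to shrink toward zero: the toy model ends up producing nearly the same number every time.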

And the problem goes beyond AI companies purposefully using output as input to feed the insatiable appetite of their models. Artificial intelligence outputs on the web (including images on this very newsletter - though, crucially, not text) are becoming more commonplace, meaning that those bots that continue to scrape the open web for content are inadvertently taking in AI outputs as inputs, which is muddying the metaphorical gene pool. They’re not trying to do this, but are having trouble avoiding it due to its increasing prevalence. It’s like dating in Iceland.

So What Does That Mean for the Future?

Companies like Anthropic and OpenAI aren’t doomed to provide the same output over and over thanks to model collapse due to synthetic data. But this slowdown in raw data consumption has implications for the larger AI market.

It puts newcomers at a disadvantage. If the insane valuations and major partnerships weren’t enough of a barrier to entry for upstart AI companies, the closing off of the free data buffet that was the open web will make that fence even higher. It’s a tragedy of the commons, if the cows were machines that ate all the grass and tried to replace the cows but did so poorly at best.

It reignites the debate about data ownership and value. Who owns open web data? What is the value exchange between a content creator and someone like an OpenAI or Anthropic? Who, at the end of the day, is responsible for ensuring fairness in this space? All questions being debated but clear as mud today.

The pace at which AI has exploded may need to cool down. Take OpenAI, for example. GPT was released in 2018. In the six years since, the difference between what GPT was and what GPT-4o is cannot be overstated. The question is, can this pace continue with the spigot turning the wrong way on free data? And, somewhat more crucially, will the models remain as widespread and affordable now that a lot of the quality data these companies need comes with a new cost?

The only certainty about AI is that the availability of free data to train these machines - which so far have made very few people a lot of money - has changed. Time will tell how much changes for the end consumer.

Grab Bag Sections

WTF GCT: I’m a Westchester commuter, which means I have the absolute privilege of avoiding Penn Station and beginning my day in the city in one of the gems of the global transit world: Grand Central Station. There’s a reason tourists flock to it, blocking the path of the New Yorkers with places to be: it is absolutely gorgeous.

This good feeling dissipates on my walk to the 4/5/6, and lately it has been gone by the time I turn onto the Lexington Passage and see the construction plywood up blocking the stairs down to the subway. For the entirety of this summer, the majority of the stairs have been blocked off going to the subway and chaos has reigned.

So my relief was palpable when I took my post-Labor Day stroll past Warby’s and felt what I imagine thirsty desert dwellers feel when they see an oasis: elation at the sight of fully functional staircases - including the escalators! - down to the subway. My elation turned to excitement to see what improvements had been made for the massive inconvenience from the summer.

Dear reader, I am a detail-oriented individual. I pride myself on my situational awareness. I like to take in details like a sponge. And I cannot tell you what the MTA spent its money on with these closures. Did they replace the escalator? Maybe, but it already looks worn. Did they shore up the stairs? They look the same. As I stood on the escalator this morning (in classic fashion, the slow walkers had already firmly settled into the left side of it, blocking those of us with places to be) I strained my eyes to find something - anything! - that looked new. I was disappointed.

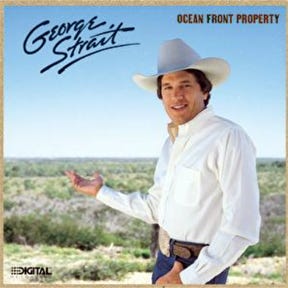

Album of the Week: I am not a country boy by any stretch of the imagination, but you have to hand it to one of the most prolific country singers in the game: the man has hits. George Strait’s Ocean Front Property contains one of his most famous tracks, and it’s this week’s album.

“All My Exes Live in Texas” is, as much as a country song can be, a bop. Its crossover appeal is evident in a Drake name drop, which might be the biggest thing for hip hop and country since Nelly’s ill-advised attempt to broaden his audience. The chorus in the title track is electric (again, as electric as country can be). And while it is a song about depression, “Am I Blue” is quite upbeat - and shoutout to the piano work at the end.

The problem with being as prolific as Mr. Strait is that his best songs are spread across many, many albums. That leaves a lot of the tracks on his albums relatively forgettable. His greatest hits compilations slap, but I find it hard to point to a single album that is a must-listen through and through. Ocean Front Property comes about as close as possible to that.

Quote of the Week: “Language is the infinite use of finite means.” - Wilhelm von Humboldt